A Tech Policy Design Playbook was developed and published as the culmination of our work with the World Wide Web Foundation and 3x3 on Deceptive Design, and includes guidance on how to run community co-design engagements around tech policy design. Superbloom, in partnership with Dr Carolina Are, facilitated three community sessions on the risk that “high-risk” content creators face of being de-platformed from essential social and content platforms.

Dr Carolina Are reached out to Superbloom to use and test the Tech Policy Design Lab Playbook, “How To Run A TPDL”, in a series of three workshops aimed at improving the design and policies of social media platforms by centering the needs of marginalized communities, journalists/activists, sex educators and adult content creators. These content creators are regularly at risk of shadow banning (having the visibility of their content quietly restricted) and de-platforming (losing access to their social media accounts, e.g. on TikTok, Instagram or Twitter/X). This is often driven by malicious “crowd-sourcing”: for example, when members of an online community rally others to have someone’s account deleted by mass-reporting the profile or account.

Making space for high-risk identities when building policy

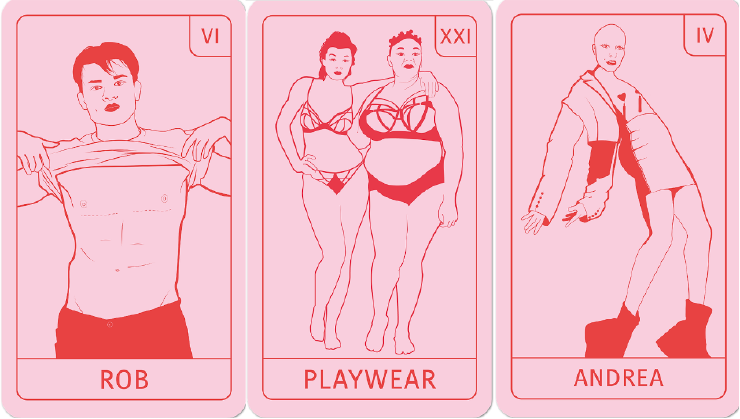

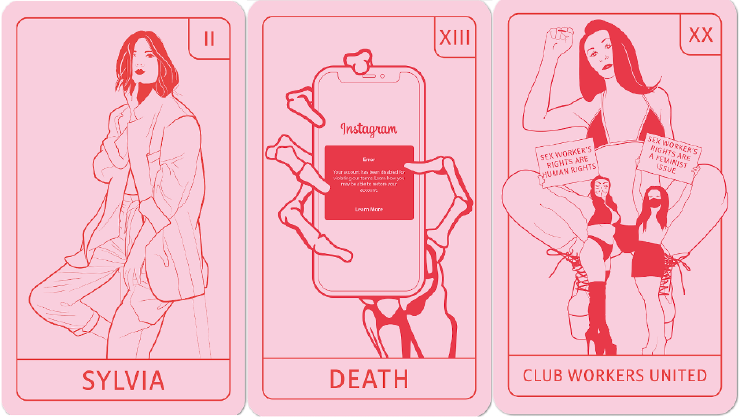

Participants in the first of the three workshop groups identified across the LGBTQIA+ spectrum and had experienced their content being censored, mass-reported or taken down because of its LGBTQIA+-related subject matter. The second group was made up of a varied range of adult content creators, including lingerie makers, painters, photographers, pole dancers and sex workers. These individuals are very aware of the rules on banned content on social media platforms, yet often find their content removed even when it complies with a platform’s rules. This is especially damaging because adult content creators often rely on these platforms for promotion, making them a vital source of income.

The third and final group was made up of people involved in activism and journalism who use social media platforms to spread and gather information about human rights cases globally. This work is often difficult because platforms censor information deemed “violent” or “sensitive”, and these users are frequently “mobbed” by those who want to suppress certain voices and perspectives. This mobbing usually takes the form of malicious mass reporting of their posts.

The workshop groups were given information and scenarios in advance, and were invited to take part in breakout group activities to propose solutions to some of the main challenges in how social media platforms govern their types of content. The workshops used a sex-positive framework to re-design platform governance. Participants didn’t need previous knowledge of design or specific technologies to take part; however, as we often find when facilitating workshops, attendees had widely varying levels of comfort with tech, design and policy topics.

The main concerns for high-risk content creators

“…Content moderation often fails to take the human experience into account to prioritize speed and platform interests, lacking in the necessary empathy for users who are experiencing abuse, censorship, loss of livelihood and network as well as emotional distress. Indeed, being de-platformed from social media often leaves users unable to access work opportunities, information, education as well as their communities and networks – and research has found this has adverse mental health and wellbeing impacts”.

From Dr Carolina Are’s report “Co-Designing Platform Governance Policies”, courtesy of the Moderation Arcana project website.

The overwhelming frustration expressed by all workshop attendees was that those who configure, manage and govern current social media platforms are not always representative of identities such as theirs (LGBTQIA+ people, adult content creators and human rights activists/journalists). This is apparent in the very design of the platforms and the policies that govern them (e.g. reporting processes that overwhelmingly punish certain identities). Although the three workshop groups were separated by content type and context, all shared a common purpose. Each group has a perspective and voice to share, and provides either essential information (e.g. journalism) or desired services (e.g. adult content and creative arts), yet they are punished by platform design for speaking out or simply for sharing their profession or identity. Often they are punished by the very systems put in place to protect them. Herein lies the complex contradiction of social media: platforms built to let ordinary citizens share their voices and opinions are also where “risky” content becomes politicized by those who seek to censor it, whether that is the platform itself or other users who decide, based on their own moral standpoint, that certain content should not be shared.

These marginalized communities and content creators went on to say that they yearn for platforms created by and for people like them (LGBTQIA+ folks, sex workers and activists), but lamented that past attempts to create such platforms have had only marginal success. This pushes people back to the mainstream platforms in order to reach others: like-minded communities, people newly exploring LGBTQIA+ identities, people who pay for adult services, and those who can benefit from activist and citizen journalist news rather than mainstream media. Because of their popularity and prevalence, these mainstream platforms have a hold over marginalized communities, and existing on them is a constant, high-risk dance along the line between censorship and being banned.

“It’s a constant anxious battle. Instagram is always terrifying, and anxious and nerve-wracking and there’s never any positive feelings around Instagram. It is always inadequacy, ‘Oh my goodness, I’m not posting enough’, or ‘Oh my God, if I don’t post everyday then my followers are going to drop’, and of course, ‘If I post every day, I’m more likely to get shadow banned or blacklisted or deleted, and have posts removed.’”

Workshop participant

Many participants spoke about platforms’ focus on business and revenue growth, saying that while they felt unwanted on these platforms from a content perspective, the platforms could not deny the revenue they bring. They described a deliberately tenuous co-existence between platforms and high-risk content: creators were wanted for the viewer traffic they generate, but were not invited to help make the platforms safer and more stable places for them.

The greatest sense of stability for these content creators came from three places:

- Being in community and connecting with similar types of content creators.

- Discussing and equipping themselves with knowledge of platform policies and national and international content laws.

- Being able to have an “inside person” working at these platforms.

All participants felt a sense of injustice about having to rely on these methods. Being in community often means being exposed to competition, or being more easily identified by citizen “online mobs” or platform moderators. Equipping yourself with knowledge requires time to find and understand frequently complex policy language. And having an inside person or employee connection comes down to luck.

Ways we want to see platforms change policies to ensure content creator safety

It was unanimously agreed that content creators at risk of shadow banning and de-platforming want explicit and clear inclusion in the decision-making processes platforms use when setting content reporting and account takedown policies. This includes better internal knowledge and training for social media platform staff, as well as platform-endorsed training for these content creators. It also requires specific, dedicated lines of communication between platform staff and content creators. Without content, these platforms have no audience. Our discussions made clear both the symbiotic relationship between platforms and creators, since platforms rely on people creating and posting content, and the fact that platforms currently take a top-down, authoritarian approach to governing their spaces.

The Superbloom workshop facilitation team also saw the benefit of equipping individuals with background knowledge ahead of policy workshops such as these. Participants come into these spaces with different levels of understanding and access. Efforts to equalize these levels are tricky and usually involve sending reading materials and resources ahead of time. This can itself widen the gap, since many participants may not have the time or background to parse that information before a workshop. This is why platform policy information must be written in plain, accessible language that empowers individuals to speak about their needs on the platforms they frequent.

When discussing users who exploit the safety systems platforms have put in place (e.g. reporting) to harm others through tactics such as mass-reporting, we advocated for a method Superbloom uses when working with the risk of “bad actors”, meaning people or structures that seek to censor. We call these Personas Non Grata. These archetypes are ways of modeling behavior that harms others, whether deliberately or not.

In summary, facilitating the three workshops taught us that there must be more consultation with those who create “high-risk” content, so that all public platforms and tools can include every internet user in a way that is safe for everyone.

You can read more academic papers by Dr Carolina Are in her research portfolio.