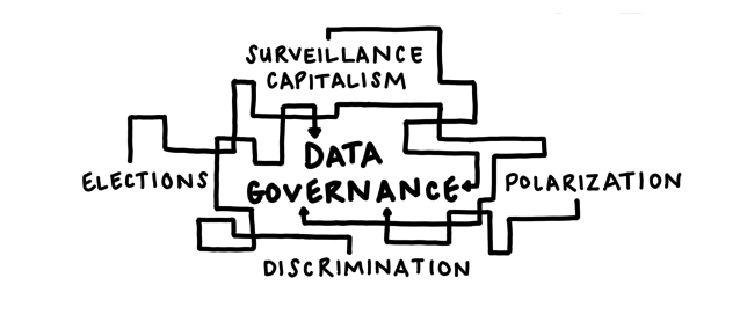

Algorithmic personalization affects everyone, whether we know it or not. It impacts the way we get our news, how we shop, and, concerningly, our mental health. By determining what we read, algorithms shape our worldview. Most web users know little about the underlying technology that powers these algorithms, in part because they are proprietary trade secrets closely guarded by their companies.

One proposal to shift the power dynamic is to help people learn more about their “filter bubble” (a term coined by Eli Pariser to refer to the highly selective snapshot of the internet that people see). If more users safely share some of their data, researchers can investigate these personalization algorithms based on lived experience. This idea, in which people share their data for research, is frequently referred to as “data donation”.

Though data donation is being used to explore interventions in decoding this closely guarded technology, it poses usability challenges as the concept is frequently counterintuitive. People might reasonably think that the best way to protect themselves from the influence of these inscrutable algorithms is to shield their data, not share it. For one thing, donated data — without strong privacy protections — could be directly linked to individuals, and there is no good way for people to know exactly what they are giving up.

Before you enthusiastically endorse a data donation program, you need to do some homework. Here are some key questions to keep in mind:

- Can users be confident that the data collectors (e.g. researchers, application developers, organizations) have good intentions? Are the risks worth it? It’s important to think about these questions even if collectors’ credentials and affiliations seem trustworthy.

- Who owns the donated data? What can the data be used for? For how long? Then what happens?

- Can these intentions and promises be verified and enforced?

- What will users know about the research and how will they agree to it? Could it be standardized? For example: I share x data for y reason with the oversight of z group.

- Would the findings drawn from the donated data serve everyone, or just a small percentage of people? Not everyone is equally likely to donate data, so how can we ensure we aren’t excluding the most vulnerable groups of society?
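The standardized statement suggested in the list above ("I share x data for y reason with the oversight of z group") could be captured as a small data structure. The following is a minimal sketch, not a description of any existing tool: the class name `DonationConsent`, its fields, and the example values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class DonationConsent:
    """Hypothetical record of one data donation agreement."""

    data_shared: str     # x: what the participant shares
    purpose: str         # y: why the data is collected
    oversight_body: str  # z: who oversees the research
    expires_on: Optional[date] = None  # retention limit, if one is stated

    def summary(self) -> str:
        """Render the agreement as one plain-language sentence."""
        sentence = (
            f"I share {self.data_shared} for {self.purpose} "
            f"with the oversight of {self.oversight_body}"
        )
        if self.expires_on is not None:
            sentence += f", until {self.expires_on.isoformat()}"
        return sentence + "."


# Illustrative values only; they do not come from any real study.
consent = DonationConsent(
    data_shared="my video recommendations",
    purpose="research on personalization",
    oversight_body="a university ethics board",
    expires_on=date(2021, 2, 1),
)
print(consent.summary())
```

Keeping the record immutable (`frozen=True`) reflects one possible design choice: a change in what is shared, why, or for how long would produce a new agreement rather than silently altering an old one.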

How can we design for data donation?

In February 2020, we hosted a workshop with the ALgorithms.EXposed (ALEX) team. The ALEX team is building browser extensions that enable people to learn more about how they are targeted by algorithms. Our goal for the workshop was to find out whether people are interested in donating data about their lived experience online, and how we can provide a safe and transparent way to do so. Our [workshop report](/resources/SimplySecure-ALEX-Workshop-Report.pdf) outlines our key findings in detail. Here are four key guidelines for data donation design in 2020 based on these findings:

1. Transparency: Ensure participant awareness in every step of the process

Complete transparency is key to giving people the information they need to make informed choices. People need to know about the investigators, the research, the risks, and the future of their data. Participants’ comfort with sharing data depends not just on the goal, but also on the approach, especially the clarity and honesty of the communication around data sharing.

2. Consent: Uphold participant control

Informed consent is complicated: participants need to understand what their data is and where it’s going. But how can you collect informed consent without becoming a burden to the user? Offering a variety of controls, like reminders, repetition, and customization, lets people choose the control model that works for them.

3. Consistency: Reinforce trust by relying on standards of interaction

Understandably, most people are unable to evaluate the trustworthiness of a system’s code, so they often rely on its visual and interaction design instead. The trust that comes from a standardized visual identity contributes to trust in the technology and the organization behind it.

4. Delivery: Involve participants in the process

Users are looking for an experience that gives back, whether through tools, resources, updates, results, or recognition. Simply being involved in the process can be an excellent means for people to educate themselves about the security and privacy of their data.

As part of a community of researchers passionate about unveiling the mysteries of corporate algorithms, we want to give power back to the people. Reverse-engineering through data donation has a lot of potential to foster transparency and understanding. But as UX designers, developers, and researchers, we must do more than just support the idea of data donation; we must actively design better data processes. The issues of privacy and security of data are more important than ever, and we hope that with well-designed flows, everyone can start to see past the edges of their own filter bubbles.