My previous posts (part one and part two) explored what phishing attacks are and ways that designers can help prevent their products from becoming a target. In this post, I’d like to examine some more technical countermeasures. If you’re a designer interested in fighting phishing, this can be useful background information, and it can help prepare you for discussions with your more technical teammates. I also hope this post will highlight that current technical solutions alone are not enough to help users fight phishing.

How browser companies fight phishing

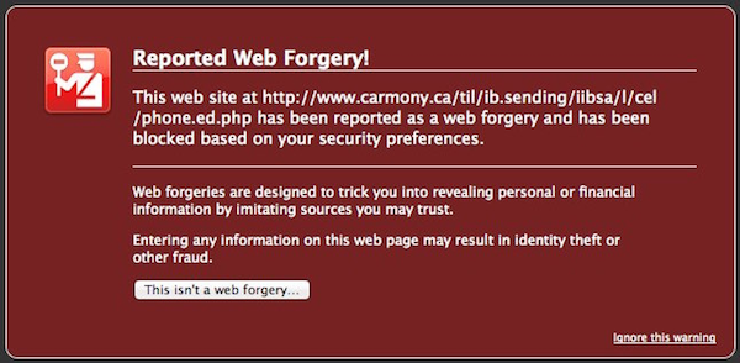

Web-browser companies work hard to fight phishing. Services such as the Safe Browsing initiative provide a continually updated catalog of probable phishing sites and help users of Chrome, Firefox, and Safari avoid them. These browsers pop up a warning message when users navigate to a site in the catalog. Some anti-virus companies provide software that performs a similar function. These services work best against phishing sites that have been around for a few hours or days but are less effective for ones that just launched or that target a limited number of high-value users (such as the spear phishing attacks I described in my first post).

An example of Firefox’s phishing warning for a site that was registered on the Safe Browsing blacklist. Adapted from this image by Paul Jacobson, which was released under a CC BY-NC-SA 2.0 license.

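For the curious, here is one way a browser can consult a blocklist without shipping the full list of bad URLs. In Safe Browsing’s local-list approach, the browser stores short hash prefixes of known-bad URL expressions and only contacts the server when a visited URL matches one. The Python below is a simplified sketch of that idea, not the real protocol. The helper names (`url_expressions`, `check_url`), the blocklist entry, and the URLs are hypothetical. The 4-byte prefix length is an assumption based on common usage. Real clients also canonicalize URLs and generate a more detailed set of host and path combinations.

```python
import hashlib
from urllib.parse import urlsplit

# Assumption: 4-byte prefixes, the length commonly used by Safe Browsing lists.
PREFIX_LEN = 4


def url_expressions(url: str) -> list:
    """Build simplified host/path expressions for a URL.

    Hypothetical helper: real clients canonicalize the URL first and use a
    more detailed set of host suffixes and path prefixes.
    """
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    labels = host.split(".")
    # The full host plus each parent domain that still has at least two labels.
    hosts = [".".join(labels[i:]) for i in range(len(labels) - 1)]
    path = parts.path or "/"
    paths = [path] if path == "/" else [path, "/"]
    return [h + p for h in hosts for p in paths]


def hash_prefix(expression: str) -> bytes:
    """SHA-256 the expression and keep only the first PREFIX_LEN bytes."""
    return hashlib.sha256(expression.encode("utf-8")).digest()[:PREFIX_LEN]


def build_local_list(blocked_expressions) -> set:
    """The browser keeps only short prefixes, not the full blocklist."""
    return {hash_prefix(e) for e in blocked_expressions}


def check_url(url: str, local_prefixes: set) -> list:
    """Return the expressions whose hash prefix is on the local list.

    A non-empty result only means "possibly unsafe": the client would then
    ask the server for full hashes before showing a warning.
    """
    return [e for e in url_expressions(url) if hash_prefix(e) in local_prefixes]


# Hypothetical blocklist entry and URLs, for illustration only.
local_list = build_local_list(["evil.example/"])
print(check_url("http://login.evil.example/verify", local_list))
print(check_url("https://www.example.com/", local_list))
```

In this design, most URLs can be checked locally, and only a prefix match triggers a round trip to the server. The weakness described above still applies: a page launched an hour ago is unlikely to be on anyone’s list yet.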

In considering the browser’s efforts to protect users, one common misconception is that the lock icon in the URL bar communicates the authenticity of a website. For example, some people might think that a lock next to a URL containing the word “Amazon” means that you’re viewing a page legitimately owned by Amazon.com. In fact, the lock symbol is meant to convey whether the connection between your computer and the web server is encrypted. It’s entirely possible for the creator of a phishing site to set up encryption on a bogus site, so relying on the presence of a lock icon alone can’t keep you from falling for an attack.

While reassuring, a lock icon in a browser’s URL bar does not necessarily mean that the site in question is legitimate.

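To make the misconception concrete, the Python sketch below contrasts the mistaken check, “it’s HTTPS and the URL mentions the brand,” with a check that asks whether the hostname actually belongs to the legitimate domain. The function names and example URLs are hypothetical. `amazon.com` is used only because it is the example in the text. A production check would also need the Public Suffix List and handling for look-alike Unicode characters, and this sketch ignores both.

```python
from urllib.parse import urlsplit


def naive_check(url: str, brand: str) -> bool:
    """The mistaken mental model: HTTPS (a lock) plus the brand name in the URL."""
    return urlsplit(url).scheme == "https" and brand in url.lower()


def is_on_domain(url: str, trusted_domain: str) -> bool:
    """True only if the URL's host is trusted_domain or one of its subdomains."""
    # urlsplit().hostname is already lowercased; drop a trailing dot if present.
    host = (urlsplit(url).hostname or "").rstrip(".")
    trusted = trusted_domain.lower()
    # The leading dot stops look-alikes such as "notamazon.com" from matching.
    return host == trusted or host.endswith("." + trusted)


# Hypothetical URLs; every one of them could show a lock icon.
urls = [
    "https://www.amazon.com/gp/cart",
    "https://amazon.com.account-verify.example/login",
    "https://secure-amazon.example/signin",
]
for url in urls:
    print(url, naive_check(url, "amazon"), is_on_domain(url, "amazon.com"))
```

All three URLs pass the naive check, but only the first is actually served from amazon.com. A bogus site can present a valid certificate for its own domain just as easily as the real one can.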

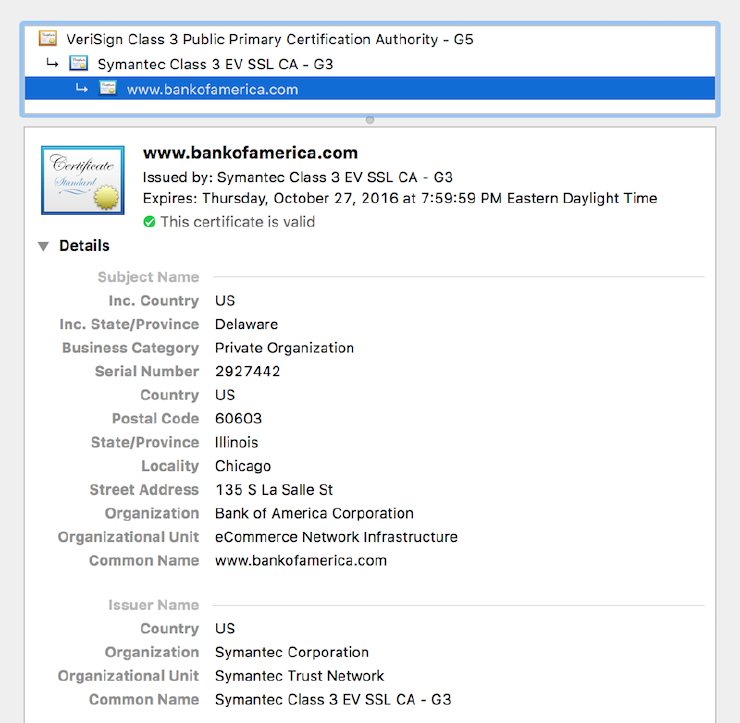

Although the lock itself isn’t necessarily meaningful in the fight against phishing, the information you get when you click on it can be, if you know what to look for. In most modern web browsers, clicking the lock shows security details about the site, including information about its SSL certificate. Depending on the type of certificate, this can include the organization’s name, its location, and which website(s) are affiliated with it. In theory, a certification authority such as Let’s Encrypt or Entrust issues a certificate only after verifying the claims it contains. For the most basic, domain-validated certificates (the only kind Let’s Encrypt issues), that means confirming control of the domain rather than the organization’s name and location. When the identity-verification process works well, someone pretending to be Amazon.com Inc. of Seattle, WA will be prevented from getting an SSL certificate tying their website to that company’s name and location.

The SSL certificate I received when viewing Bank of America’s website. It is issued by the Symantec Corporation’s “certification authority” and has a specific assurance level.

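The same details are available programmatically, which can help when discussing them with technical teammates. This minimal Python sketch uses the standard `ssl` module. With a verifying context, `getpeercert()` returns the server’s certificate as a dictionary, and the helper below pulls out the fields mentioned above: organization, location, issuer, and affiliated sites. The helper names and the sample certificate values are hypothetical, made up for illustration.

```python
import socket
import ssl


def fetch_peer_cert(host: str, port: int = 443) -> dict:
    """Open a TLS connection and return the server's certificate as a dict.

    Needs network access. The default context verifies the certificate chain
    and hostname, raising ssl.SSLCertVerificationError if either check fails.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def summarize_cert(cert: dict) -> dict:
    """Pull out the identity fields a browser shows when you click the lock."""

    def flatten(rdns):
        # 'subject' and 'issuer' are tuples of RDNs, each a tuple of (name, value) pairs.
        return {name: value for rdn in rdns for name, value in rdn}

    subject = flatten(cert.get("subject", ()))
    issuer = flatten(cert.get("issuer", ()))
    location = [subject.get(k) for k in ("localityName", "stateOrProvinceName", "countryName")]
    return {
        # Domain-validated certificates usually carry no organizationName, so this may be None.
        "organization": subject.get("organizationName"),
        "location": ", ".join(part for part in location if part),
        "issued_by": issuer.get("organizationName"),
        "sites": [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"],
    }


# Hypothetical certificate in the shape getpeercert() returns (values made up).
sample_cert = {
    "subject": (
        (("countryName", "US"),),
        (("stateOrProvinceName", "Washington"),),
        (("localityName", "Seattle"),),
        (("organizationName", "Example Corp"),),
        (("commonName", "www.example.com"),),
    ),
    "issuer": (
        (("countryName", "US"),),
        (("organizationName", "Example CA"),),
        (("commonName", "Example CA Root"),),
    ),
    "subjectAltName": (("DNS", "www.example.com"), ("DNS", "example.com")),
}
print(summarize_cert(sample_cert))
```

With network access, `summarize_cert(fetch_peer_cert(host))` would summarize a live site’s certificate. For a domain-validated certificate, expect the organization field to be empty. That is a reminder that the lock alone says little about who runs the site.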

In practice, the process can be very messy and subject to corruption or subversion. This was the case in 2011, when the webmail accounts of up to 300,000 Iranians were compromised after the Dutch certification authority DigiNotar was hacked. By issuing fraudulent SSL certificates, the attackers were able to convincingly impersonate domains such as gmail.com, steal a number of Iranian users’ credentials, and spy on them. Even sophisticated users were fooled.
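Why is one hacked certification authority enough? In the classic model, a browser accepts a certificate for a domain if it names that domain and was signed by any authority in the browser’s trust store. The toy Python model below captures just that rule. The `Certificate` class, the CA names, and the hostnames are hypothetical. Real validation also checks signatures, certificate chains, expiry dates, and revocation, all of which this sketch leaves out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Certificate:
    """Toy certificate: the hostname it vouches for and the CA that signed it."""
    hostname: str
    issuer: str


# Hypothetical trust store: any of these CAs may vouch for any domain.
TRUSTED_CAS = frozenset({"Example Root CA", "Another Root CA", "Breached CA"})


def browser_accepts(cert: Certificate, hostname: str, trusted_cas=TRUSTED_CAS) -> bool:
    # Classic rule: the certificate must name the host and come from *some*
    # trusted CA. Nothing ties a domain to the CA it normally uses.
    return cert.hostname == hostname and cert.issuer in trusted_cas


genuine = Certificate("mail.example.com", "Example Root CA")
# Issued by attackers after breaking into a trusted CA.
forged = Certificate("mail.example.com", "Breached CA")

print(browser_accepts(genuine, "mail.example.com"))
print(browser_accepts(forged, "mail.example.com"))
# Once the breach is discovered, removing the CA from the trust store blocks the forgery.
print(browser_accepts(forged, "mail.example.com", TRUSTED_CAS - {"Breached CA"}))
```

The forged certificate is accepted until someone notices the breach and the authority is distrusted. That window is what the newer defenses try to close.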

When the classic certificate-based system fails, there are newer lines of defense such as key pinning and certificate transparency. Key pinning is a browser feature to verify that the SSL certificates for a company