This is the first in a short series of posts looking at Facebook’s “Privacy Checkup” feature. This installment examines why even privacy advocates who avoid social-media sites should take time to understand it and related user experiences. The next installment will go into depth critiquing the feature itself, taking lessons from the user experience that are useful to any designer of privacy- or security-related software.

As a reader of the Simply Secure blog, chances are good that you spend a fair amount of time thinking about privacy and data security. If you use programs like Tor Browser, Privacy Badger, or Signal, you might express your personal data-privacy goals with statements like “I don’t want anyone to be able to follow what I do online,” “I want to be able to control my online data and metadata as much as possible,” or “I don’t want any companies or governments spying on me.”

While people who don’t use these types of programs may have spent fewer hours searching out vetted open-source software, that doesn’t mean that they don’t care about their data privacy. The Pew Research Center has found that “91% of American adults say that consumers have lost control over how personal information is collected and used by companies,” and that “the public has little confidence in the security of their everyday communications.”

One thing that Ame heard during her recent study in New York City is that participants were concerned that they were being surveilled by the police through Facebook. Although we need no external validation or justification of their concerns to find meaning in their lived experience, there is tremendous evidence that their fears are justified – as reported by The Verge, The Guardian, and other outlets.

“The mix of social media and conspiracy statutes creates a dragnet that can bring almost anybody in.” – Andrew Laufer, as quoted by The Verge

Of course, some privacy advocates would tell people with these concerns that privacy is anathema to sites like Facebook because their revenue model is largely based on gathering data about users and selling targeted ads. And, depending on your threat model and the security practices of the social-media site in question, it’s true that sharing your data with such sites can put your security at risk – both online and in the physical world – especially if you are an activist under threat from state actors.

But there is no single definition of what is “private enough.” Everyone has different threat models. And as a user advocate with a human-centered-design ethos, I argue that it is not reasonable to simply tell a billion or more people that they should abandon the platforms they use to communicate every day with friends and relatives – that they use to buy used clothing for their children, get inspiration for their creative endeavors, and hunt for job opportunities. The value that users get from these sites is too high; a message to leave them based on amorphous privacy threats would fall on deaf ears.

We should instead make sure that users have the tools they need to manage who can see their data, and work to understand the ways that sites share data outside of the user-visible platform. It sounds like the New York City police are not able to obtain warrants that provide them access to large swaths of the local population’s accounts (although I welcome correction on this point). This means that helping users control who sees their data shared through the platform – and an increased focus on helping individuals detect phony friend requests – could go a long way toward protecting the participants Ame talked to who were concerned about unwarranted police surveillance.

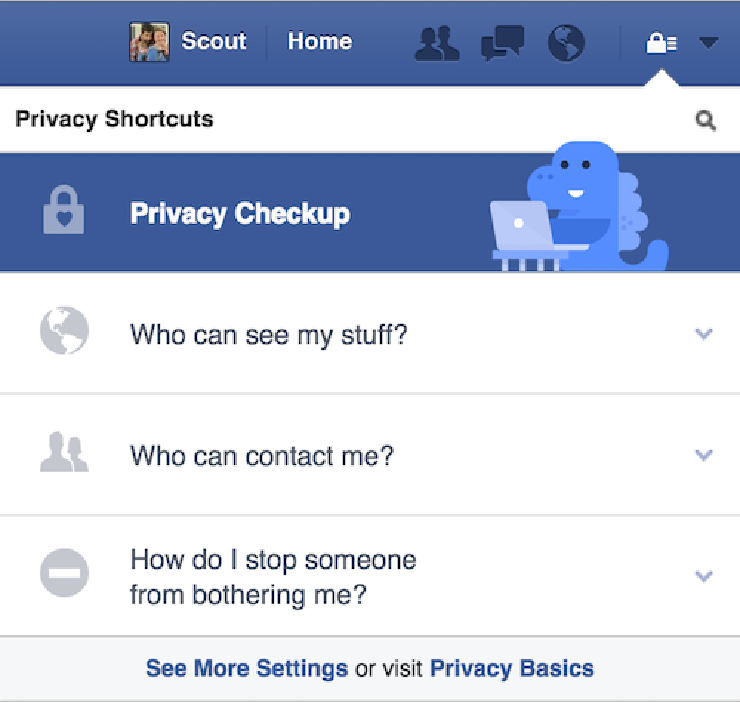

This is why features like Facebook’s “Privacy Checkup” hold great potential. The best way to understand how well it works for users in practice would be to look at Facebook’s usage statistics (How many users complete the checkup? What changes do they implement as part of the process? Do they engage more with their privacy settings than users who don’t interact with the feature? Do their sharing behaviors change after completing the checkup?) or perform a user study (Do users understand what is going on? Are they happy with the results of the checkup when it’s complete? What about six months later? Do they feel that their data is safer? Do they work with friends or family to help them protect their data?). But we’re going to be scrappy and do an armchair expert review – the kind of analysis that is easy to perform on any piece of software after using it for a short amount of time. This kind of review is most useful for identifying low-hanging fruit – i.e., obvious things that may confuse or frustrate users.

Stay tuned for the next post in this series, where we’ll start taking the feature apart and identifying lessons that are useful to any designer of privacy- or security-related software.

Screenshot of Scout’s Facebook account, taken today.
