If you’re coming to the study of security and privacy from another field, it can sometimes be tough to get a clear answer to what seems like a simple question: Is this app secure? However, if you’re working on the user experience for that software, it’s critical that you understand the assumptions that security experts are making about your users and their behavior – and not just take the experts’ word that all is well.

I’ve written before that neither security nor usability is a binary property. In a nutshell, this means that there’s a lot of gray area when it comes to deciding whether something is secure or insecure. Security enthusiasts often come at the problem by answering one question: Is this the most secure solution available? Meanwhile, non-experts are actually asking a different one: Is this secure enough for what I want to do? Like usability, security is defined as a function of a particular set of users and their needs – more specifically, as a function of the threats they face.

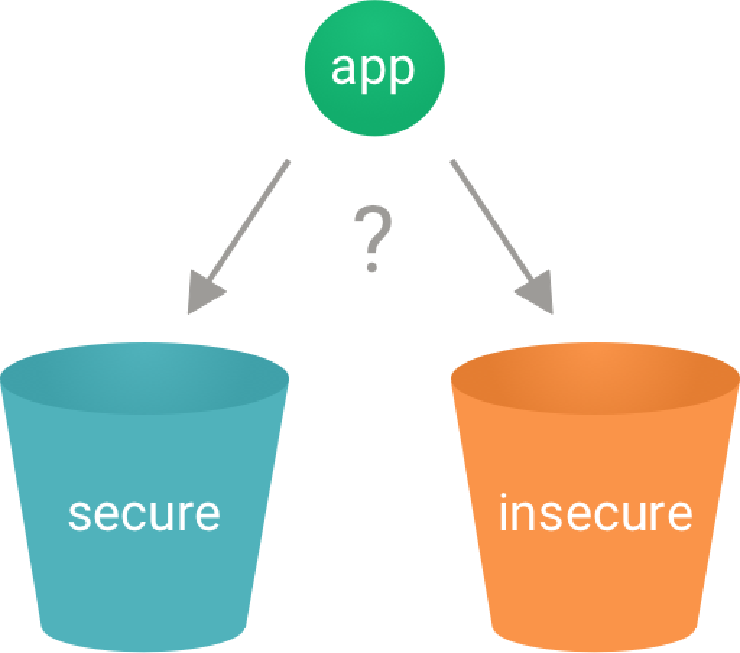

It’s often hard to classify an app as being secure or insecure without additional context about the user and their goals.

If you’re a designer, usability researcher, or other UX professional collaborating with a security engineer for the first time, it may be uncomfortable to push back and ask for more information about the app’s security features, especially if the answers are full of unfamiliar acronyms and buzzwords. And it’s tempting as a security expert to say something along the lines of, “trust me; this is secure.” But digging into this kind of cross-discipline dialogue can be essential for identifying places where users will get tripped up. Talking across the divide can prevent an app that is secure in theory from becoming seriously flawed in practice.

Here are some questions that teams of security experts and UX professionals should be able to answer about the software they are building together. This kind of conversation is best held in front of a whiteboard, where team members can communicate visually as well as verbally. Security experts should challenge themselves to offer complete explanations in accessible terms. Their partners should be vocal when a concept is not clear.

- What is the threat model (probable set of attacks) that the software protects against? Who are the “adversaries” that are likely to try to subvert the software, and what features are in place to protect against them? Include attackers interested in your infrastructure (either for monetary gain or for the lulz) as well as those interested in your users’ data (whether they’re harvesting large quantities or carrying out a personal vendetta).

- What threats is the software currently vulnerable to? Don’t forget to consider users who are at risk of targeted attacks, such as domestic abuse survivors, investigative journalists, or LGBTQ activists around the world (one resource to help you get started is our former fellow Gus Andrews’ set of user personas for privacy and security). Remember in particular that some people are targeted by their telecom providers and even their governments.

- How does the UX help users understand both the protections the software offers and the protections it does not offer? Given the people who use the software (or are likely to use it in the future), does the UX do an accurate and reasonable job of conveying how it aims to keep its users secure? For example, if the software advertises encryption, is that truly to inform users or simply to smooth over their fears (pro tip: the phrase “military-grade encryption” is never a good sign for users)? If your chat app’s messages automatically disappear, do people understand that there are ways those messages may still be retained?

- Are there things that users can do – or fail to do – that will expose them to additional risk? Does the UX help shape their behavior appropriately to counter this eventuality? For example, does the app periodically encourage users to protect their accounts with basic features like two-factor authentication? Does it send two-factor codes only by SMS, or does it also support alternatives like app-generated codes or hardware tokens?

This is just a starting point in what we hope will become an ongoing conversation between UX professionals and their security-minded colleagues. Great software is built around excellent user experiences, and keeping users’ data safe is a core (if often implicit) user need. For this reason, we believe designers and UX researchers can be important advocates for user security. Conversations across the divide are an important first step to making this a reality.

Would your team like help talking through these types of issues? We can lend a hand. Drop us a line at [email protected].